Server overload caused by Docker-based cron jobs: root cause and optimization

We noticed significant server overload after migrating cron jobs to run inside Docker containers. The number and logic of jobs hadn’t changed – only the method of execution had. This triggered a deeper investigation into the cause.

Identifying the Cause

The first step was to set up per-process resource monitoring. We deployed process-exporter, built a dedicated dashboard, and tried to attribute the load to three groups of processes:

- Python processes

- docker-compose processes

- Docker shim-related processes

The collected data showed that Python processes were responsible for most of the load, while Docker-related processes didn’t appear to add substantial overhead. The question remained: why did the overload start only after the switch to Docker-based crons?
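As a rough illustration of this grouping, the sketch below reads each PID's command line and cumulative CPU time from /proc and sums CPU seconds per group. It is a hypothetical helper, not process-exporter's configuration or code: the group names (python, docker-compose, docker-shim) and the matching rules in classify() are assumptions, and a one-off snapshot like this only counts processes that are still alive when it runs.

```python
import os
from collections import defaultdict


def classify(argv):
    """Map a process's argv to one of the groups above (hypothetical rules)."""
    if not argv:
        return "other"  # kernel threads and zombies have an empty cmdline
    exe = os.path.basename(argv[0])
    if exe.startswith("containerd-shim"):
        return "docker-shim"
    # Check every argument, not only argv[0], in case the compose binary
    # is launched through an interpreter or wrapper.
    if any(os.path.basename(arg) == "docker-compose" for arg in argv):
        return "docker-compose"
    if exe.startswith("python"):
        return "python"
    return "other"


def cpu_by_group(samples):
    """Sum CPU seconds per group from (argv, cpu_seconds) pairs."""
    totals = defaultdict(float)
    for argv, cpu_seconds in samples:
        totals[classify(argv)] += cpu_seconds
    return dict(totals)


def read_proc_samples(proc="/proc"):
    """Return (argv, cumulative CPU seconds) for every live PID (Linux only)."""
    ticks_per_second = os.sysconf("SC_CLK_TCK")
    samples = []
    for pid in os.listdir(proc):
        if not pid.isdigit():
            continue
        try:
            with open(os.path.join(proc, pid, "cmdline"), "rb") as f:
                argv = [a.decode(errors="replace") for a in f.read().split(b"\0") if a]
            with open(os.path.join(proc, pid, "stat"), errors="replace") as f:
                stat = f.read()
        except OSError:
            continue  # the process exited between listdir() and open()
        # Split after the ")" closing the command name; the remaining fields
        # start at field 3 (state), so utime (14) and stime (15) sit at 11, 12.
        fields = stat.rsplit(")", 1)[1].split()
        utime, stime = int(fields[11]), int(fields[12])
        samples.append((argv, (utime + stime) / ticks_per_second))
    return samples
```

On a Linux host, cpu_by_group(read_proc_samples()) returns a snapshot of cumulative CPU seconds per group; taking two snapshots a few seconds apart and subtracting them gives a rough per-group rate.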

Forming Hypotheses

We suspected two main reasons:

- process-exporter's sampling interval might be too low, or its overhead too high (it crawls all of /proc, which during cron bursts can include over 5,000 PIDs).

- Docker’s startup behavior might obscure the real overhead: the container is prepared by runc init, which then performs multiple setup steps before the actual command runs. These transient resource spikes might be invisible in normal monitoring windows.
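The second hypothesis comes down to sampling. The sketch below models it under a simplifying assumption: a /proc-based exporter only sees a process if that process is alive at one of the scrape instants 0, interval, 2 * interval, and so on. visible_fraction() is a hypothetical helper and real exporters differ in detail, but it shows why short-lived setup processes are easy to miss.

```python
import math


def visible_fraction(lifetimes, interval):
    """Fraction of processes alive at one or more scrape instants.

    lifetimes: (start, end) pairs in seconds with start < end.
    Scrapes are assumed to happen at t = 0, interval, 2 * interval, ...
    """
    if not lifetimes:
        return 0.0
    seen = 0
    for start, end in lifetimes:
        # First scrape at or after the moment the process appears.
        first_scrape = math.ceil(start / interval) * interval
        if first_scrape < end:
            seen += 1
    return seen / len(lifetimes)
```

For example, with a hypothetical 15-second interval, visible_fraction([(1, 2), (20, 21), (44, 46)], 15) counts only the last process, which is alive at the 45-second scrape; the two one-second processes fall between scrapes.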

Previous Cron Setup

Before the optimization, cron jobs looked like this:

```bash
docker-compose run --rm app python script.py arguments
```

Each task created a new container that was destroyed after execution.

Optimization

We introduced a persistent background container for all jobs to reuse:

```bash
docker-compose run --rm -d --name cron-runner app bash -c "while :; do sleep 60; done"
```

We then changed the cron entries to run each command inside that existing container with docker exec:

```bash
docker exec cron-runner python script.py arguments
```
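Cron entries that call docker exec fail if cron-runner is not running, for example after a reboot. One way to guard against that is a small wrapper that starts the background container on demand and then runs the job in it. This is a minimal sketch, not the setup described above: run_job(), ensure_running() and the other helpers are hypothetical, and it assumes the app service and cron-runner name used above and that a stopped cron-runner container has already been removed.

```python
import subprocess

CONTAINER = "cron-runner"  # container name used in the commands above
SERVICE = "app"            # docker-compose service used in the commands above


def start_cmd():
    """Command that starts the long-lived background container."""
    return ["docker-compose", "run", "--rm", "-d", "--name", CONTAINER,
            SERVICE, "bash", "-c", "while :; do sleep 60; done"]


def exec_cmd(script, args):
    """Command that runs one job inside the already running container."""
    return ["docker", "exec", CONTAINER, "python", script, *args]


def is_running():
    """True if the container exists and is running."""
    result = subprocess.run(
        ["docker", "inspect", "-f", "{{.State.Running}}", CONTAINER],
        capture_output=True, text=True)
    return result.returncode == 0 and result.stdout.strip() == "true"


def ensure_running():
    """Start the container if needed; raise if it is still not running."""
    if is_running():
        return
    # If the start fails (for example because another job started the
    # container at the same moment), only give up when it is still down.
    subprocess.run(start_cmd())
    if not is_running():
        raise RuntimeError(f"container {CONTAINER!r} is not running")


def run_job(script, args):
    """Run `python script args...` in the shared container; return its exit code."""
    ensure_running()
    return subprocess.run(exec_cmd(script, args)).returncode
```

A crontab line would then call a small, hypothetical run_job.py entry point (for example python3 run_job.py script.py arguments) that passes its arguments to run_job() and exits with the returned code.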

Results

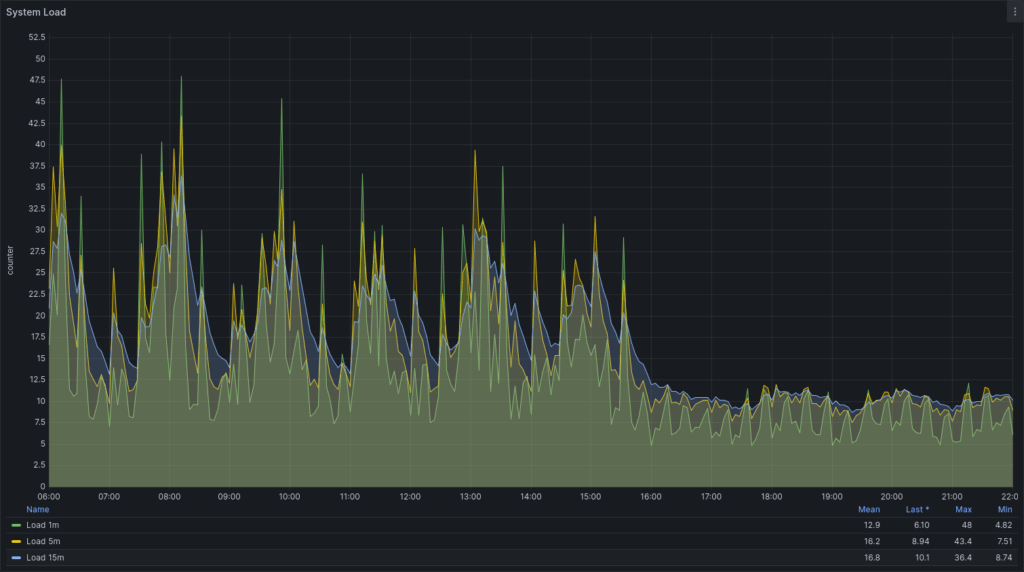

Load Average

After switching to docker exec, the load average dropped significantly. On the graph for 2025-03-11 (06:00 to 22:00), a clear decrease is visible after about 15:50, when the change was made.
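To put a number on a drop like this, the load-average series can be split at the moment of the change and the two halves compared. A minimal sketch, assuming the series has been exported from the dashboard as (timestamp, load) pairs; before_after() is a hypothetical helper, and CHANGE_AT uses the approximate switch time read from the graph.

```python
from datetime import datetime
from statistics import mean

# Approximate switch-over time read from the load-average graph.
CHANGE_AT = datetime(2025, 3, 11, 15, 50)


def before_after(samples, change_at=CHANGE_AT):
    """Return (mean before, mean after) for (timestamp, value) samples.

    Raises statistics.StatisticsError if either side has no samples.
    """
    before = [value for ts, value in samples if ts < change_at]
    after = [value for ts, value in samples if ts >= change_at]
    return mean(before), mean(after)
```

Comparing windows of equal length on either side of the change keeps unrelated daily patterns from skewing the result.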

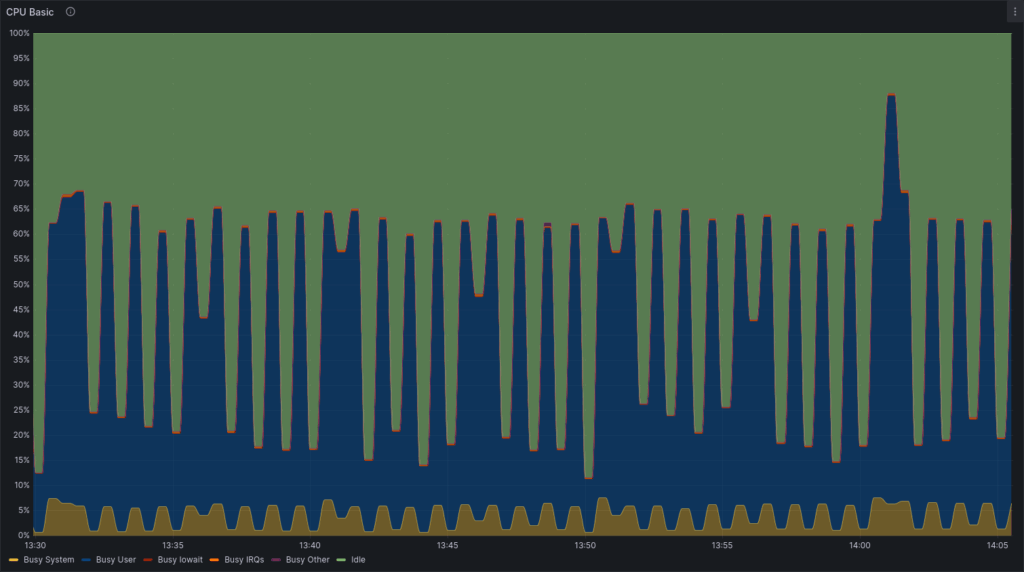

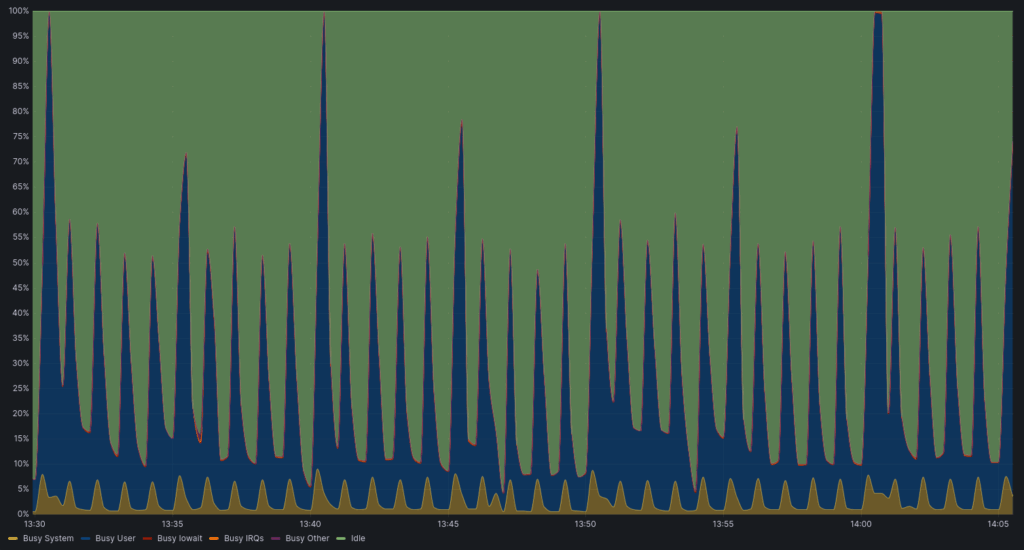

CPU Usage Pattern

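CPU usage became more bursty, with higher peaks of shorter duration instead of broad, low plateaus. This points to more efficient CPU utilization: each job does its work and finishes sooner, instead of keeping the CPU partly busy for long periods.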

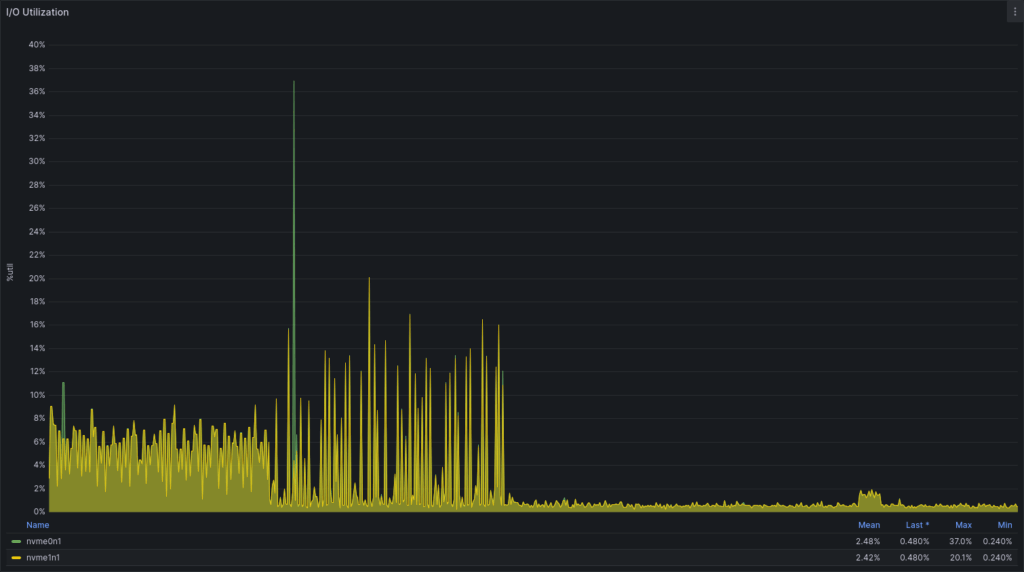

IO Reduction

There was also a noticeable drop in disk I/O between 14:00 and 18:00 on the same day.

Analysis

The overhead wasn’t due to Docker itself but to our misuse of it. Each docker-compose run triggers:

- A new runc process

- Namespace creation

- Storage mount via overlayfs

- Forking and container configuration

- Finally, exec of the target command

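These steps consume significant resources and add latency before the actual job starts. With docker exec, most of this is skipped: the new process joins the namespaces of a container that is already running, with its filesystem already mounted.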
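The startup cost can be checked directly by timing a trivial command each way. This is a rough sketch under stated assumptions: time_command() is a hypothetical helper, the COLD and WARM commands assume the app service and cron-runner container from the setup above, and wall-clock time only captures latency, not the CPU the Docker daemons spend on container setup.

```python
import statistics
import subprocess
import sys
import time

# Baseline without any container: plain interpreter start-up on the host.
BASELINE = [sys.executable, "-c", "pass"]
# Hypothetical comparison commands; both run the same trivial program.
COLD = ["docker-compose", "run", "--rm", "app", "python", "-c", "pass"]
WARM = ["docker", "exec", "cron-runner", "python", "-c", "pass"]


def time_command(argv, runs=5):
    """Run argv `runs` times and return the median wall-clock time in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(argv, check=True,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        durations.append(time.perf_counter() - start)
    return statistics.median(durations)
```

On the same host, time_command(COLD) minus time_command(WARM) approximates the per-job setup latency that docker exec avoids, while BASELINE shows how much of either figure is just interpreter start-up.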

Conclusion

If you’re seeing unexplained server load spikes after containerizing cron jobs, consider avoiding docker run or docker-compose run for each task. Use a long-lived container and run jobs inside it with docker exec. This dramatically reduces overhead and improves CPU and IO efficiency.